How To Upload Large Files To S3 Using The Multipart Upload Feature

Upload large files using Lambda and S3 multipart upload in chunks

I was recently asked if it was possible to upload a large file to S3 programmatically.

The problem is that a single upload request (PUT) to Amazon S3 is limited to 5 GB per object.

But is there a way to circumvent this limit?

There is, and it is a supported S3 feature called multipart upload.
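To make these limits concrete, here is a small sketch of how a client might choose an upload method by file size. It assumes the limits AWS documents (5 GB per single PUT, 5 TB per object, and AWS's suggestion to consider multipart once an object reaches about 100 MB), treated here as binary units; `chooseUploadMethod` is a hypothetical helper, not part of the AWS SDK.

```javascript
// S3 size limits (AWS documents them as 5 GB and 5 TB; binary units assumed here)
const MiB = 1024 ** 2;
const GiB = 1024 ** 3;
const TiB = 1024 ** 4;

const SINGLE_PUT_MAX = 5 * GiB;        // largest object a single PutObject request can upload
const MAX_OBJECT_SIZE = 5 * TiB;       // largest object S3 stores, reachable only via multipart
const MULTIPART_THRESHOLD = 100 * MiB; // size at which AWS suggests considering multipart

// Hypothetical helper: choose an upload method for a file of `sizeBytes` bytes
function chooseUploadMethod(sizeBytes) {
  if (sizeBytes > MAX_OBJECT_SIZE) {
    throw new RangeError("File exceeds the 5 TiB maximum S3 object size");
  }
  if (sizeBytes > SINGLE_PUT_MAX) return "multipart (required)";
  if (sizeBytes >= MULTIPART_THRESHOLD) return "multipart (recommended)";
  return "single PUT";
}

console.log(chooseUploadMethod(10 * MiB));  // single PUT
console.log(chooseUploadMethod(200 * MiB)); // multipart (recommended)
console.log(chooseUploadMethod(6 * GiB));   // multipart (required)
```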

Let’s take a look at how to create a multipart upload.

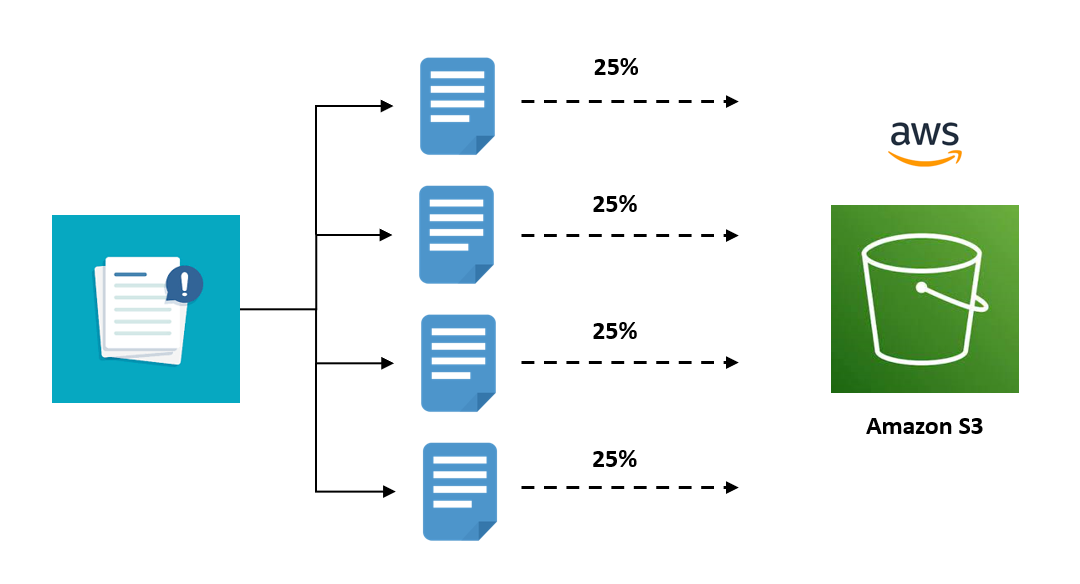

Overview

We will write some code in AWS Lambda that breaks our large file down into smaller chunks and uploads these chunks to S3 individually; S3 then assembles them back into one single file (object).
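The core idea can be tried locally before involving S3. This minimal Node.js sketch splits a buffer into fixed-size chunks and joins them back together; `splitIntoChunks` is a hypothetical helper, and `Buffer.concat` only stands in for the reassembly S3 performs when the upload is completed.

```javascript
// Hypothetical helper: split a buffer into consecutive chunks of at most `chunkSize` bytes
function splitIntoChunks(buffer, chunkSize) {
  const chunks = [];
  for (let offset = 0; offset < buffer.length; offset += chunkSize) {
    // subarray() returns a view into the same memory, so no bytes are copied here
    chunks.push(buffer.subarray(offset, offset + chunkSize));
  }
  return chunks;
}

const original = Buffer.from("abcdefghij"); // 10 bytes standing in for a large file
const chunks = splitIntoChunks(original, 4);
console.log(chunks.map((c) => c.toString())); // [ 'abcd', 'efgh', 'ij' ]

// Stand-in for the reassembly S3 performs when the multipart upload is completed
const rebuilt = Buffer.concat(chunks);
console.log(rebuilt.equals(original)); // true
```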

AWS Lambda Code

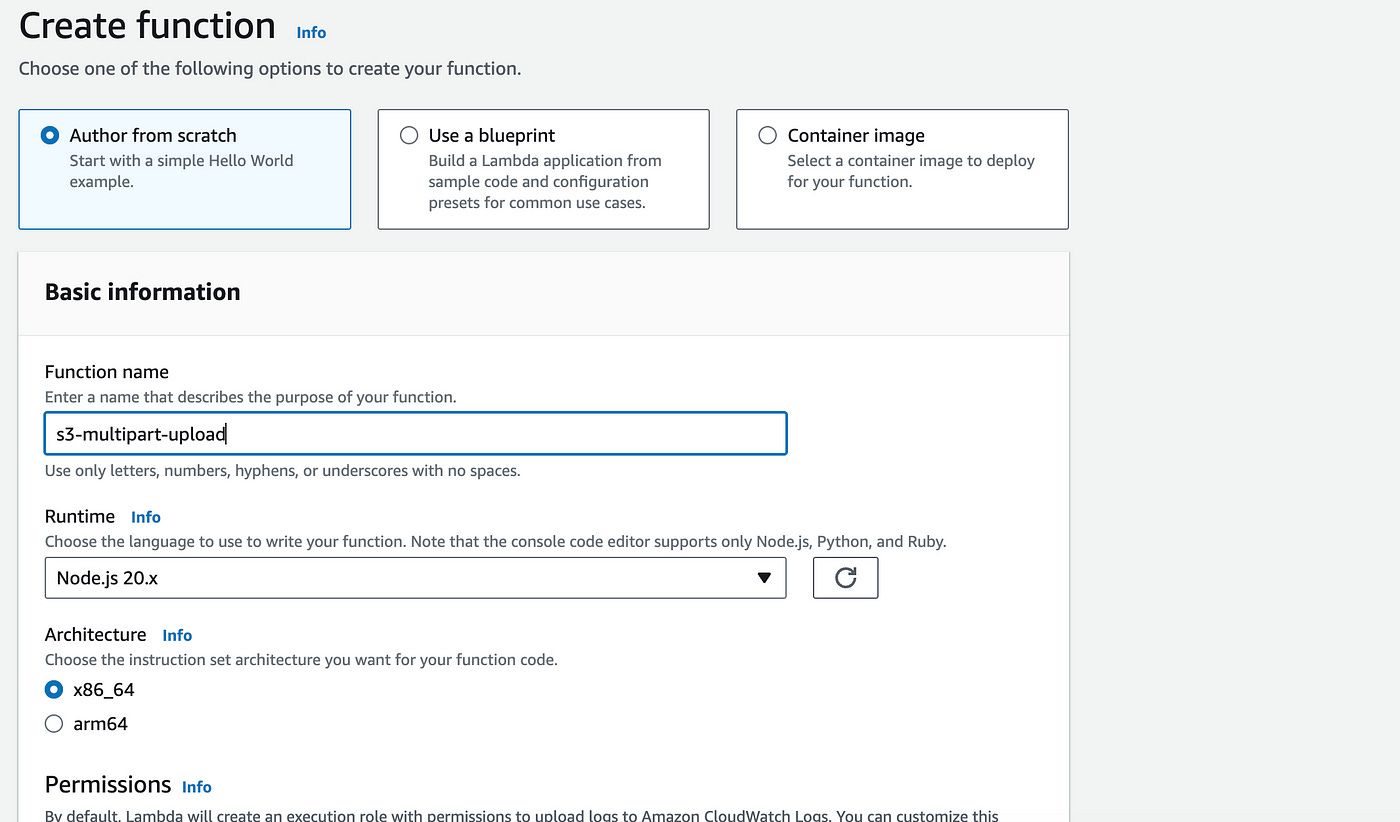

In your AWS account, head over to Lambda.

Let’s create a new function with the following configurations:

Author from scratch

Name the function: s3-multipart-upload

Choose the Node.js 20.x runtime

Add an execution role with permission to write to S3 (learn how to do this here).
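As one possible shape for that role's permissions, here is a sketch that builds a minimal inline policy. It assumes the bucket name used later in this article and no KMS encryption on the bucket. Per the S3 documentation, creating a multipart upload, uploading its parts, and completing it are all authorized by `s3:PutObject`, while aborting one needs `s3:AbortMultipartUpload`. `buildMultipartUploadPolicy` is a hypothetical helper.

```javascript
// Hypothetical helper: build an IAM policy document for the Lambda execution role.
// CreateMultipartUpload, UploadPart and CompleteMultipartUpload are authorized by
// s3:PutObject; AbortMultipartUpload needs its own s3:AbortMultipartUpload action.
function buildMultipartUploadPolicy(bucketName) {
  return {
    Version: "2012-10-17",
    Statement: [
      {
        Effect: "Allow",
        Action: ["s3:PutObject", "s3:AbortMultipartUpload"],
        // Object-level actions apply to objects inside the bucket, hence the /* suffix
        Resource: `arn:aws:s3:::${bucketName}/*`,
      },
    ],
  };
}

// Print JSON that can be pasted into the IAM console as an inline policy
console.log(JSON.stringify(buildMultipartUploadPolicy("my-large-upload-bucket"), null, 2));
```

The role still needs the CloudWatch Logs permissions that the basic Lambda execution role provides; this policy only covers the S3 side.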

Create the function and on the new page scroll down to the Code section.

Let’s break down the code into 3 steps:

Step 1

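```javascript
const bucketName = "my-large-upload-bucket";

// Function URL invocations deliver the request body as a string;
// direct invocations (e.g. a console test event) pass the JSON object itself
const payload = typeof event.body === "string" ? JSON.parse(event.body) : event;
const key = payload.key; // the file name, used as the object key
const fileBuffer = Buffer.from(payload.fileBuffer, "base64"); // the file contents, sent base64-encoded

// Step 1: Create a multipart upload
const createMultipartUploadCommand = new CreateMultipartUploadCommand({
  Bucket: bucketName,
  Key: key,
});

const multipartUpload = await s3Client.send(createMultipartUploadCommand);
const uploadId = multipartUpload.UploadId;
```

We start by defining the name of our S3 bucket (create it if you haven't yet).

Next, we read the file name (used as the object key) and the file contents from the incoming event. JSON cannot carry raw bytes, so the file arrives as a base64 string that we decode into a Buffer. The payload check lets the same code handle both a console test event and a request made through a function URL.

We then use CreateMultipartUploadCommand from the S3 API to start a multipart upload. We send the command to S3 and keep the UploadId it returns, which identifies this upload in every later request.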
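One detail the handler relies on: a Lambda event is JSON, and JSON has no binary type, so the file's bytes must travel as text. This Node.js sketch shows what happens to an `ArrayBuffer` passed straight to `JSON.stringify`, and how a base64 round trip preserves the bytes. It assumes the client base64-encodes the file and that function URL bodies arrive as plain JSON text; `encodePayload` and `decodePayload` are hypothetical names.

```javascript
// JSON has no binary type: an ArrayBuffer serializes as an empty object
console.log(JSON.stringify({ fileBuffer: new ArrayBuffer(4) })); // {"fileBuffer":{}}

// Hypothetical client side (simulated in Node.js): send the bytes as a base64 string
function encodePayload(key, bytes) {
  return JSON.stringify({ key, fileBuffer: Buffer.from(bytes).toString("base64") });
}

// Hypothetical handler side: accept a function URL event (body is a string)
// or a direct invocation (the parsed JSON object itself), then decode the bytes
function decodePayload(event) {
  const payload = typeof event.body === "string" ? JSON.parse(event.body) : event;
  return { key: payload.key, fileBuffer: Buffer.from(payload.fileBuffer, "base64") };
}

const bytes = Uint8Array.from([0, 255, 16, 32]);
const body = encodePayload("report.bin", bytes);

const viaUrl = decodePayload({ body });         // shaped like a function URL event
const direct = decodePayload(JSON.parse(body)); // shaped like a direct invocation
console.log(viaUrl.fileBuffer.equals(Buffer.from(bytes))); // true
console.log(direct.key); // report.bin
```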

Step 2

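```javascript
// Step 2: Upload parts
const partSize = 100 * 1024 * 1024; // 100 MB; every part except the last must be at least 5 MB
const parts = [];

for (let i = 0, partNumber = 1; i < fileBuffer.length; i += partSize, partNumber++) {
  // subarray() returns a view of the current chunk without copying it
  const partBuffer = fileBuffer.subarray(i, i + partSize);

  const uploadPartCommand = new UploadPartCommand({
    Bucket: bucketName,
    Key: key,
    PartNumber: partNumber, // 1-based, at most 10,000
    UploadId: uploadId,
    Body: partBuffer,
  });

  const part = await s3Client.send(uploadPartCommand);

  // The UploadPart response contains the ETag but not the part number, so track it ourselves
  parts.push({ ETag: part.ETag, PartNumber: partNumber });
}
```

In the next step, we set the size of each part to 100 MB.

We then loop over the file buffer in 100 MB steps. In each iteration, we take the current chunk, upload it as one part with UploadPartCommand, and record the ETag that S3 returns together with the part's number, since step 3 needs both.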
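S3 puts bounds on the parts themselves: each part except the last must be at least 5 MB, no part may exceed 5 GB, and one upload can have at most 10,000 parts. A small planning function can check a part size against those limits before any request is sent. This is a sketch using binary units; `planParts` is a hypothetical helper.

```javascript
const MiB = 1024 ** 2;
const GiB = 1024 ** 3;
const MIN_PART_SIZE = 5 * MiB; // every part except the last must be at least this large
const MAX_PART_SIZE = 5 * GiB; // no single part may exceed this
const MAX_PARTS = 10000;       // part numbers run from 1 to 10,000

// Hypothetical helper: compute the byte range of each part of a file of `fileSize` bytes
function planParts(fileSize, partSize) {
  if (partSize < MIN_PART_SIZE || partSize > MAX_PART_SIZE) {
    throw new RangeError("partSize must be between 5 MiB and 5 GiB");
  }
  const count = Math.ceil(fileSize / partSize);
  if (count > MAX_PARTS) {
    throw new RangeError(`${count} parts exceeds the 10,000-part limit; use a larger partSize`);
  }
  const parts = [];
  for (let partNumber = 1; partNumber <= count; partNumber++) {
    const start = (partNumber - 1) * partSize;
    // Only the final part may be smaller than partSize
    const end = Math.min(start + partSize, fileSize);
    parts.push({ partNumber, start, end });
  }
  return parts;
}

// A 250 MiB file split into 100 MiB parts
console.log(planParts(250 * MiB, 100 * MiB).map((p) => (p.end - p.start) / MiB)); // [ 100, 100, 50 ]
```

The part limit is why part size matters for very large files: at 100 MiB per part, 10,000 parts top out near 977 GiB, so reaching S3's 5 TiB maximum object size takes parts of roughly 525 MiB or more.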

Step 3

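```javascript
// Step 3: Complete the multipart upload
const completeMultipartUploadCommand = new CompleteMultipartUploadCommand({
  Bucket: bucketName,
  Key: key,
  UploadId: uploadId,
  MultipartUpload: { Parts: parts }, // part numbers and ETags, in ascending PartNumber order
});

const result = await s3Client.send(completeMultipartUploadCommand);

// Return a JSON body so the caller has something to parse
return { statusCode: 200, body: JSON.stringify({ location: result.Location }) };
```

In the last step, we send CompleteMultipartUploadCommand with the part numbers and ETags collected in step 2. S3 then assembles the parts, in part-number order, into a single object.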
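The Parts list passed to the complete call has a strict shape: one entry per part with its `ETag` and `PartNumber`, in ascending part-number order (S3 rejects an out-of-order list). That ordering is easy to get wrong if parts are ever uploaded in parallel, since they can finish in any order. Here is a hedged sketch of a hypothetical `buildCompleteInput` helper that validates and sorts the parts before building the command input.

```javascript
// Hypothetical helper: build the input object for CompleteMultipartUploadCommand.
// S3 requires the Parts list in ascending PartNumber order, which matters if the
// parts were uploaded in parallel and finished out of order.
function buildCompleteInput(bucket, key, uploadId, parts) {
  for (const part of parts) {
    if (!part.ETag) throw new Error(`Part ${part.PartNumber} has no ETag`);
  }
  const sorted = [...parts].sort((a, b) => a.PartNumber - b.PartNumber);
  return {
    Bucket: bucket,
    Key: key,
    UploadId: uploadId,
    MultipartUpload: {
      Parts: sorted.map(({ ETag, PartNumber }) => ({ ETag, PartNumber })),
    },
  };
}

const input = buildCompleteInput("my-large-upload-bucket", "video.mp4", "example-upload-id", [
  { ETag: '"etag-2"', PartNumber: 2 },
  { ETag: '"etag-1"', PartNumber: 1 },
]);
console.log(input.MultipartUpload.Parts.map((p) => p.PartNumber)); // [ 1, 2 ]
// The result could then be sent as: new CompleteMultipartUploadCommand(input)
```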

Full Code

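Here is the full code (including the AWS SDK import) to paste into the Lambda function. The Node.js 20.x runtime already includes version 3 of the AWS SDK for JavaScript, so nothing needs to be installed. The console's default handler file for this runtime is index.mjs, an ES module, so the code uses import/export syntax (in a CommonJS index.js file you would use require and exports.handler instead):

```javascript
import {
  S3Client,
  CreateMultipartUploadCommand,
  UploadPartCommand,
  CompleteMultipartUploadCommand,
  AbortMultipartUploadCommand,
} from "@aws-sdk/client-s3";

const s3Client = new S3Client({ region: "us-east-1" }); // use your own region

export const handler = async (event) => {
  const bucketName = "my-large-upload-bucket";

  // Function URL invocations deliver the request body as a string;
  // direct invocations (e.g. a console test event) pass the JSON object itself
  const payload = typeof event.body === "string" ? JSON.parse(event.body) : event;
  const key = payload.key; // the file name, used as the object key
  const fileBuffer = Buffer.from(payload.fileBuffer, "base64"); // the file contents, sent base64-encoded

  // Step 1: Create a multipart upload
  const createMultipartUploadCommand = new CreateMultipartUploadCommand({
    Bucket: bucketName,
    Key: key,
  });
  const multipartUpload = await s3Client.send(createMultipartUploadCommand);
  const uploadId = multipartUpload.UploadId;

  try {
    // Step 2: Upload parts
    const partSize = 100 * 1024 * 1024; // 100 MB; every part except the last must be at least 5 MB
    const parts = [];

    for (let i = 0, partNumber = 1; i < fileBuffer.length; i += partSize, partNumber++) {
      const partBuffer = fileBuffer.subarray(i, i + partSize);

      const uploadPartCommand = new UploadPartCommand({
        Bucket: bucketName,
        Key: key,
        PartNumber: partNumber,
        UploadId: uploadId,
        Body: partBuffer,
      });
      const part = await s3Client.send(uploadPartCommand);

      // The UploadPart response contains the ETag but not the part number
      parts.push({ ETag: part.ETag, PartNumber: partNumber });
    }

    // Step 3: Complete the multipart upload
    const completeMultipartUploadCommand = new CompleteMultipartUploadCommand({
      Bucket: bucketName,
      Key: key,
      UploadId: uploadId,
      MultipartUpload: { Parts: parts },
    });
    const result = await s3Client.send(completeMultipartUploadCommand);

    return { statusCode: 200, body: JSON.stringify({ location: result.Location }) };
  } catch (error) {
    // Abort so S3 discards the uploaded parts instead of keeping (and billing) them
    await s3Client.send(
      new AbortMultipartUploadCommand({ Bucket: bucketName, Key: key, UploadId: uploadId })
    );
    throw error;
  }
};
```

Click on Deploy to save and deploy the function.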
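If a multipart upload is never completed or aborted, the parts already uploaded stay in the bucket and are billed as storage, even though no regular object appears. A bucket lifecycle rule can clean up such uploads automatically. Here is a sketch, assuming a 7-day window chosen only for illustration; `buildAbortIncompleteUploadsRule` is a hypothetical helper that builds the input for the SDK's `PutBucketLifecycleConfigurationCommand`.

```javascript
// Hypothetical helper: build input for PutBucketLifecycleConfigurationCommand
// (from @aws-sdk/client-s3) so that S3 aborts multipart uploads left unfinished.
function buildAbortIncompleteUploadsRule(bucketName, days = 7) {
  return {
    Bucket: bucketName,
    LifecycleConfiguration: {
      Rules: [
        {
          ID: "abort-incomplete-multipart-uploads",
          Status: "Enabled",
          Filter: { Prefix: "" }, // empty prefix: the rule applies to the whole bucket
          AbortIncompleteMultipartUpload: { DaysAfterInitiation: days },
        },
      ],
    },
  };
}

const input = buildAbortIncompleteUploadsRule("my-large-upload-bucket");
console.log(JSON.stringify(input.LifecycleConfiguration.Rules[0].AbortIncompleteMultipartUpload));
// {"DaysAfterInitiation":7}

// With the SDK available, this would be sent as:
//   await s3Client.send(new PutBucketLifecycleConfigurationCommand(input));
```

Keep in mind that this call replaces the bucket's entire lifecycle configuration, so merge the rule with any rules the bucket already has.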

Testing Our Code

We are now ready to test our code with an upload.

To test this, I recommend changing two things.

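First, use a smaller file for a faster test. Keep in mind that a synchronous Lambda invocation, including a request through a function URL, accepts a request payload of at most 6 MB, and that limit applies to the whole JSON body carrying the file, so the test file needs to stay comfortably below it. Then change the partSize variable to a smaller value:

```javascript
const partSize = 5 * 1024 * 1024; // 5 MB, the minimum S3 allows for every part except the last
```

Note that data transferred into Amazon S3 is free, but you still pay per request (each UploadPart call counts as one) and for the storage the uploaded object occupies.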
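To check ahead of time whether a test file will fit, here is a rough estimate of the JSON body the frontend sends. It assumes the file is base64-encoded inside a `{ key, fileBuffer }` body, an ASCII file name, and a 6 MB limit of 6 × 1024 × 1024 bytes; `estimatePayloadBytes` and `fitsInLambdaPayload` are hypothetical helpers.

```javascript
// Assumed synchronous invocation payload limit: 6 MB, taken as 6 * 1024 * 1024 bytes
const LAMBDA_SYNC_PAYLOAD_LIMIT = 6 * 1024 * 1024;

// Hypothetical helper: estimate the size of {"key":"<name>","fileBuffer":"<base64>"}
function estimatePayloadBytes(fileSize, fileName) {
  const base64Length = 4 * Math.ceil(fileSize / 3); // base64 turns every 3 bytes into 4 characters
  // Length of the JSON wrapper itself; equals bytes only for an ASCII file name
  const wrapperLength = JSON.stringify({ key: fileName, fileBuffer: "" }).length;
  return wrapperLength + base64Length;
}

function fitsInLambdaPayload(fileSize, fileName) {
  return estimatePayloadBytes(fileSize, fileName) <= LAMBDA_SYNC_PAYLOAD_LIMIT;
}

const MiB = 1024 * 1024;
console.log(fitsInLambdaPayload(4 * MiB, "test.bin")); // true
console.log(fitsInLambdaPayload(5 * MiB, "test.bin")); // false
```

Because of the base64 overhead, the largest file that fits this way is only about 4.5 MB, so with a 5 MB part size such a test upload consists of a single part.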

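Create a file upload feature using JavaScript on the frontend:

```javascript
const fileInput = document.getElementById('fileInput');
const uploadButton = document.getElementById('uploadButton');

// Read a File as base64 text: JSON cannot carry raw bytes, and an ArrayBuffer
// passed to JSON.stringify would be serialized as an empty object
function readFileAsBase64(file) {
  return new Promise((resolve, reject) => {
    const reader = new FileReader();
    // reader.result looks like "data:<type>;base64,<data>"; keep only the data
    reader.onload = () => resolve(reader.result.split(',')[1]);
    reader.onerror = () => reject(reader.error);
    reader.readAsDataURL(file);
  });
}

uploadButton.addEventListener('click', async () => {
  const file = fileInput.files[0];
  if (!file) return; // nothing selected

  const data = {
    key: file.name,
    fileBuffer: await readFileAsBase64(file), // base64 text; the handler decodes it back to bytes
  };

  const response = await fetch('https://your-lambda-function-url.com/upload', {
    method: 'POST',
    body: JSON.stringify(data),
    headers: {
      'Content-Type': 'application/json',
    },
  });

  const result = await response.json();
  console.log(result);
});
```

To get an endpoint URL to call your Lambda function, check out this blog post. If the page is served from a different origin than the function URL, you also need to allow that origin in the function URL's CORS settings.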

Conclusion

We explored how to upload large files to Amazon S3 programmatically using the Multipart Upload feature, which allows us to break down files into smaller chunks and upload them individually.

Creating this pipeline is a simple 3-step process:

Breaking a large file down into smaller chunks.

Uploading these chunks to S3 individually.

Building them back up into one single file.

With this process, we can upload files larger than the 5 GB limit S3 imposes on a single upload request, up to S3's maximum object size of 5 TB.

👋 My name is Uriel Bitton and I’m committed to helping you master Serverless, Cloud Computing, and AWS.

🚀 If you want to learn how to build serverless, scalable, and resilient applications, you can subscribe to my blog:

https://medium.com/@atomicsdigital/subscribe

Thanks for reading and see you in the next one!